Researchers at Intel Labs developed a system capable of digitally recreating a scene from a series of photos taken in it.

Called Free View Synthesis, the system works in multiple steps, combining traditional algorithms with neural networks. The result is 3D geometry, including colored texturing, which the system can use to synthesize new views of the scene: views not present in the input images.

The input images are not labeled or described; they’re just real photos taken within the scene.

- Step 1: The open source COLMAP “Structure from Motion” (SfM) algorithm is used to determine the camera position of each source image, as well as to get a rudimentary point cloud of the scene

- Step 2: COLMAP’s multi-view stereo (MVS) algorithm is run on each source image to generate a basic point cloud reconstruction

- Step 3: A mathematical technique called Delaunay triangulation is used to generate a proxy geometry mesh

- Step 4: A shared convolutional neural network (the image encoder network) encodes the key features of each source image

- Step 5: The key features are mapped into the target view using the depth map derived from the proxy geometry generated in Step 3

- Step 6: A recurrent neural network (the blending decoder network) aggregates the features into a single blended output frame
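To make Step 5 more concrete, here is a minimal sketch of the geometry behind mapping a source image into a target view with a depth map. It is not the paper's implementation. It assumes a simple pinhole camera with the same intrinsics for both views, and all function and parameter names are hypothetical. For one target pixel with known depth, it finds the source pixel whose features would be sampled.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Lift a target pixel (u, v) with known depth to a 3D point
    in the target camera's coordinate frame (pinhole model)."""
    return ((u - cx) / fx * depth, (v - cy) / fy * depth, depth)


def transform(point: Vec3,
              rotation: Sequence[Sequence[float]],
              translation: Sequence[float]) -> Vec3:
    """Apply the rigid transform that takes target-camera coordinates
    to source-camera coordinates: p' = R * p + t."""
    x, y, z = (
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    return (x, y, z)


def project(point: Vec3, fx: float, fy: float,
            cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a 3D point in camera coordinates to pixel coordinates.
    Returns None if the point lies behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def target_to_source_pixel(u: float, v: float, depth: float,
                           intrinsics: Tuple[float, float, float, float],
                           rotation: Sequence[Sequence[float]],
                           translation: Sequence[float]
                           ) -> Optional[Tuple[float, float]]:
    """For a target pixel with depth taken from the proxy geometry,
    find the source pixel that sees the same 3D point."""
    fx, fy, cx, cy = intrinsics
    p_target = backproject(u, v, depth, fx, fy, cx, cy)
    p_source = transform(p_target, rotation, translation)
    return project(p_source, fx, fy, cx, cy)


# Hypothetical example values: 640x480 image, focal length 500 px.
INTRINSICS = (500.0, 500.0, 320.0, 240.0)
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))

same_view = target_to_source_pixel(100.0, 50.0, 2.0, INTRINSICS,
                                   IDENTITY, (0.0, 0.0, 0.0))
shifted_view = target_to_source_pixel(320.0, 240.0, 2.0, INTRINSICS,
                                      IDENTITY, (0.1, 0.0, 0.0))
```

In a real pipeline this lookup would run for every target pixel and every source image, typically as a batched tensor operation with bilinear sampling of the encoded feature maps rather than a per-pixel Python loop.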

For a full technical breakdown of the process, see the research paper PDF.

Together, these steps currently take a significant amount of time, so the system can’t run in real time. That could change in the future, as more powerful inference hardware emerges and the approach is optimized.

Next Level Experience Sharing

This isn’t a new idea, or even the first attempt. But Intel’s algorithm seems to produce significantly more realistic results than the previous state of the art, Samsung’s NPBG (Neural Point-Based Graphics), which was also published recently.

Unlike with previous attempts, Intel’s method produces a sharp output. Even small details in the scene are legible, and there’s very little of the blur normally seen when too much of the output is crudely “hallucinated” by a neural network.

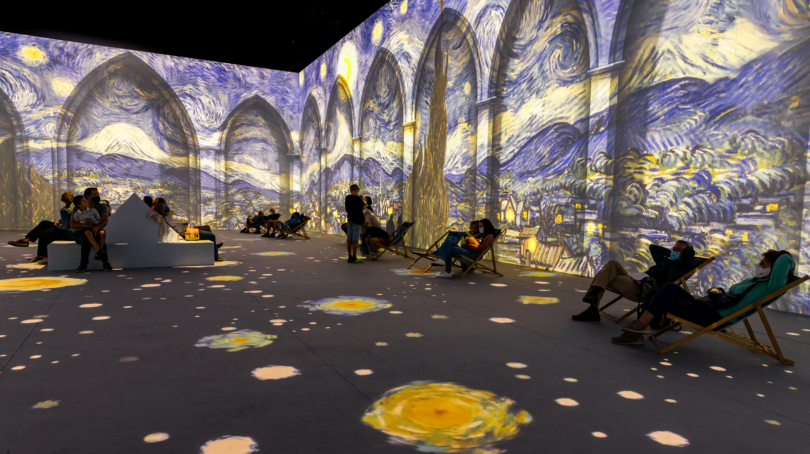

We already digitally share interesting places we visit. Billions of people upload travel photos & videos to social media platforms, either to show friends or for public viewing.

If the techniques used in this paper can make their way out of a research lab and into mainstream software, you’ll one day be able to share not just an angle or clip, but a rich 3D representation of the location itself.

These scenes could of course be viewed on traditional screens, but stepping into them with a VR headset could truly convey what it’s like to be someplace else.

Source:

https://uploadvr.com/intel-labs-free-view-synthesis/