The Movement SDK for Unity developer guide covers Body Tracking for Meta Quest and Meta Quest Pro, and Face Tracking and Eye Tracking for Meta Quest Pro. It also includes FAQs, an API reference, and other material to help you understand and use Movement SDK for Unity.

Overview

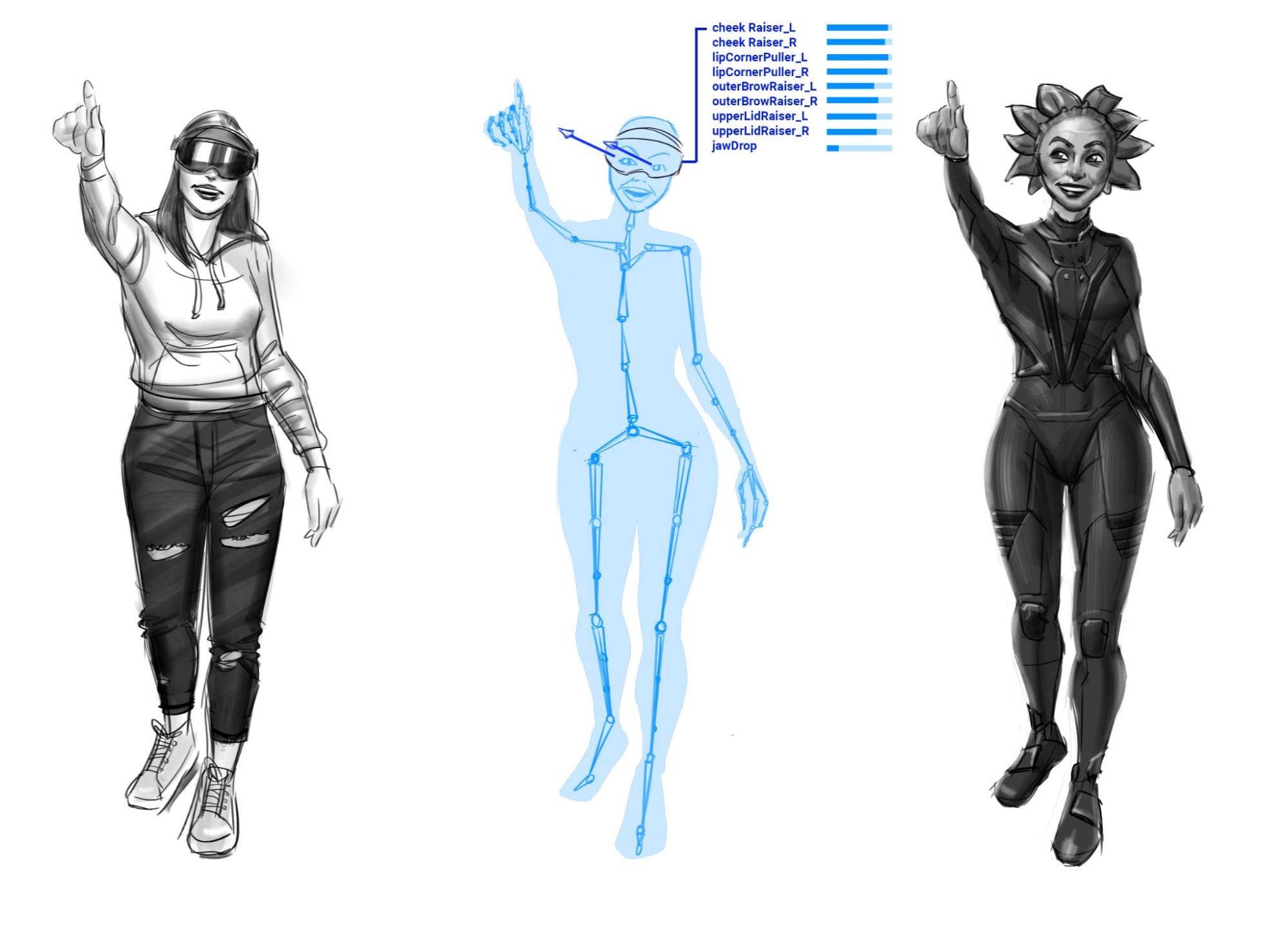

Movement SDK for Unity uses body tracking, face tracking, and eye tracking to bring a user's physical movements into the metaverse and enhance social experiences. Tracking provides abstracted signals that developers can use to animate characters with social presence and to build features that go beyond character embodiment.

Prerequisites

To use Movement SDK for Unity, the following is required:

- A Meta Quest or Meta Quest Pro headset for body tracking

- A Meta Quest Pro headset for face tracking and eye tracking

- Meta Quest OS 46.0 or higher

- The latest version of the Oculus Unity Integration SDK

- A Unity project with standard configuration settings and OVRPlugin configured to use OpenXR as its backend
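The headset and OS requirements above can be summarized as a small capability check. This is a hypothetical sketch and is not part of Movement SDK. It encodes only the headset models and the minimum OS version listed above. The feature labels ("body", "face", "eye") and the helper names are illustrative assumptions. The Unity, Oculus Integration, and OpenXR requirements are not modeled.

```python
from dataclasses import dataclass

# Hypothetical encoding of the prerequisites listed above.
# Feature labels and helper names are illustrative, not SDK identifiers.
MIN_OS_VERSION = (46, 0)

FEATURES_BY_HEADSET = {
    "Meta Quest": {"body"},
    "Meta Quest Pro": {"body", "face", "eye"},
}


@dataclass(frozen=True)
class Device:
    headset: str      # e.g. "Meta Quest Pro"
    os_version: str   # dotted version string, e.g. "46.0"


def parse_version(text: str) -> tuple[int, ...]:
    """Turn "46" or "46.0.1" into a comparable tuple, padded to at least two parts."""
    parts = [int(part) for part in text.split(".")]
    while len(parts) < 2:
        parts.append(0)
    return tuple(parts)


def supported_features(device: Device) -> set[str]:
    """Return the tracking features whose headset and OS prerequisites are met."""
    if parse_version(device.os_version) < MIN_OS_VERSION:
        return set()  # OS too old: no Movement SDK features
    return set(FEATURES_BY_HEADSET.get(device.headset, set()))
```

For example, `supported_features(Device("Meta Quest", "46.0"))` yields only `{"body"}`, because face tracking and eye tracking require a Meta Quest Pro.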

Body Tracking

Body Tracking for Meta Quest and Meta Quest Pro uses the movements of the headset and hand controllers to infer a user's body pose. The pose is tracked as an abstract series of points that the Body Tracking API maps onto a set of body joints for a humanoid character rig.
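The mapping step can be illustrated with a minimal, hypothetical retargeting sketch. It assumes that each tracked point arrives with a name, a world-space position, and a validity flag. The joint names and the rig bone names in `JOINT_TO_BONE` are invented for illustration and are not the Body Tracking API's identifiers.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class TrackedJoint:
    name: str        # hypothetical tracked-joint label, e.g. "Hips"
    position: Vec3   # world-space position in meters
    is_valid: bool   # whether the tracker produced a pose for this joint


# Hypothetical mapping from tracked joints to the bones of a humanoid rig.
JOINT_TO_BONE = {
    "Hips": "pelvis",
    "Head": "head",
    "LeftHandWrist": "hand_l",
    "RightHandWrist": "hand_r",
}


def retarget(joints: list[TrackedJoint], rig_pose: dict[str, Vec3]) -> list[str]:
    """Copy valid tracked positions onto mapped rig bones; return the bones updated."""
    updated = []
    for joint in joints:
        bone = JOINT_TO_BONE.get(joint.name)
        if bone is None or not joint.is_valid:
            continue  # unmapped or untracked joint: keep the rig's previous pose
        rig_pose[bone] = joint.position
        updated.append(bone)
    return updated
```

This sketch copies positions only. A production retargeter would also transfer joint rotations and compensate for differences in bone lengths between the tracked skeleton and the character.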

For more information, go to Body Tracking.

Face Tracking

Face Tracking for Meta Quest Pro relies on inward-facing cameras to detect expressive facial movements. These movements are categorized into expressions that the Face Tracking API represents through a set of 63 blendshapes, which apps can use to implement face tracking.
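The sketch below shows one way an app might consume the 63 blendshape values. It is hypothetical and makes three assumptions: the weights arrive normalized to [0, 1], the character's own blendshapes use a 0 to 100 range, and `rig_indices` is an app-defined mapping. None of these names come from the Face Tracking API.

```python
NUM_BLENDSHAPES = 63  # count stated in the text above


def to_rig_weights(weights: list[float],
                   rig_indices: dict[int, int],
                   rig_max: float = 100.0) -> dict[int, float]:
    """Map normalized expression weights onto a character's blendshape slots.

    weights: one value per expression, assumed to lie in [0, 1].
    rig_indices: hypothetical map from expression index to character blendshape index.
    rig_max: assumed upper bound of the character's blendshape range.
    """
    if len(weights) != NUM_BLENDSHAPES:
        raise ValueError(f"expected {NUM_BLENDSHAPES} weights, got {len(weights)}")
    out = {}
    for expr_index, rig_index in rig_indices.items():
        w = min(max(weights[expr_index], 0.0), 1.0)  # clamp out-of-range values
        out[rig_index] = w * rig_max
    return out
```

Keeping the mapping in data rather than in code lets the same tracking output drive characters whose blendshapes are named or ordered differently.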

For more information, go to Natural Facial Expressions.

Eye Tracking

Eye Tracking for Meta Quest Pro detects eye movement and enables the Eye Gaze API to drive the eye transforms for a user’s embodied character as they look around. The abstracted eye gaze representation that the API provides allows a user’s character representation to make eye contact with other users, significantly improving social presence.
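As a hypothetical illustration of how gaze data can support eye contact, the sketch below tests whether one eye is looking at another user's head. It assumes the following:

- Each eye pose is available as a position plus a unit quaternion stored as (w, x, y, z).
- The eye looks along +Z, which matches Unity's forward axis.
- A 5-degree cone counts as eye contact.

The function names and the threshold are illustrative and are not part of the Eye Gaze API.

```python
import math

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q using v' = v + w*t + cross(q.xyz, t)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q.xyz, v)
    tx = 2 * (y * vz - z * vy)
    ty = 2 * (z * vx - x * vz)
    tz = 2 * (x * vy - y * vx)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def is_eye_contact(eye_pos: Vec3, eye_rot: Quat, target_pos: Vec3,
                   max_angle_deg: float = 5.0) -> bool:
    """True if the gaze ray points within max_angle_deg of the target position."""
    gaze = rotate(eye_rot, (0.0, 0.0, 1.0))  # assumed forward axis: +Z
    to_target = tuple(t - e for t, e in zip(target_pos, eye_pos))
    dist = math.sqrt(sum(c * c for c in to_target))
    if dist == 0.0:
        return False  # target coincides with the eye; direction is undefined
    gaze_len = math.sqrt(sum(c * c for c in gaze))
    cos_angle = sum(g * t for g, t in zip(gaze, to_target)) / (gaze_len * dist)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard acos against rounding error
    return math.degrees(math.acos(cos_angle)) <= max_angle_deg
```

An angular cone is used rather than an exact ray hit because small tracking noise would otherwise make eye contact flicker on and off.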

For more information, go to Eye Tracking.

Source:

https://developer.oculus.com/documentation/unity/move-overview/